Bio

Ben Laufer is a PhD candidate in the Bowers College of Computing and Information Science at Cornell Tech. He is advised by Jon Kleinberg and Helen Nissenbaum and is affiliated with the AI, Policy, and Practice Group and the Digital Life Initiative (DLI). His research studies how data-driven and AI technologies behave, how they interact with people and institutions, and how they should be governed. He draws on tools from network science, game theory, and statistics, as well as from ethics, law, and policy, to build models and measurements for understanding the social impacts of computing. Before Cornell, he worked as a data scientist at Lime, and he earned his B.S.E. in Operations Research and Financial Engineering from Princeton University.

Ben's research has been supported by a LinkedIn PhD Fellowship and a DLI Doctoral Fellowship. He has also spent time as a research intern at Microsoft Research with the Fairness, Accountability, Transparency, and Ethics in AI group. He has received three “Rising Star” recognitions, most recently as a Rising Star in Data Science at Stanford.

Projects

Anatomy of a Machine Learning Ecosystem

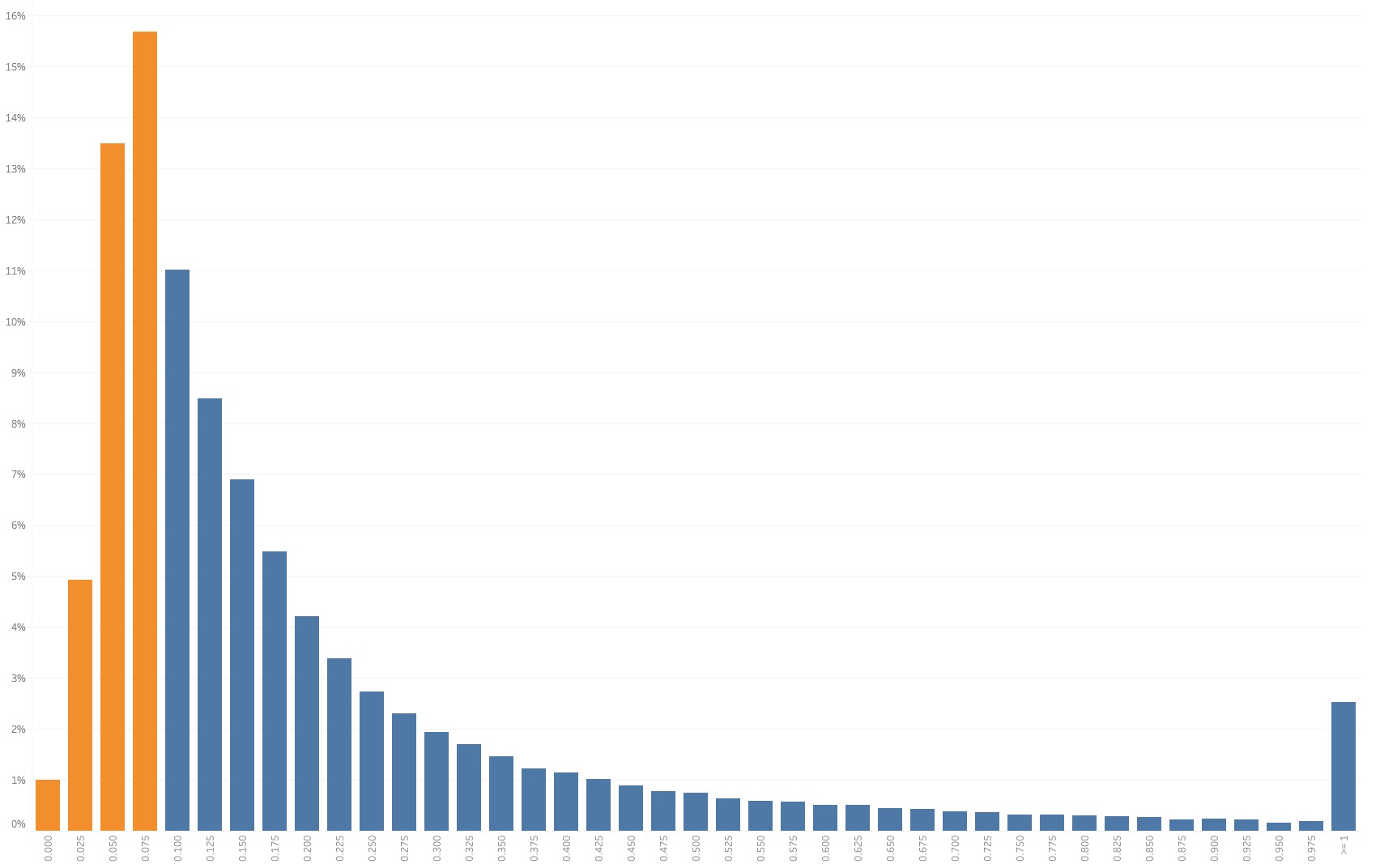

Modern AI development often looks less like single-model engineering and more like an ecosystem: models are adapted, remixed, and fine-tuned into sprawling “family trees.” We analyze 1.86 million Hugging Face models and map fine-tuning lineages at ecosystem scale, then use an evolutionary-biology lens to measure trait inheritance, “genetic” similarity in metadata and model cards, and directed mutation over time. Our observations reveal ecosystem dynamics around model licenses, documentation practices, language support, and more.

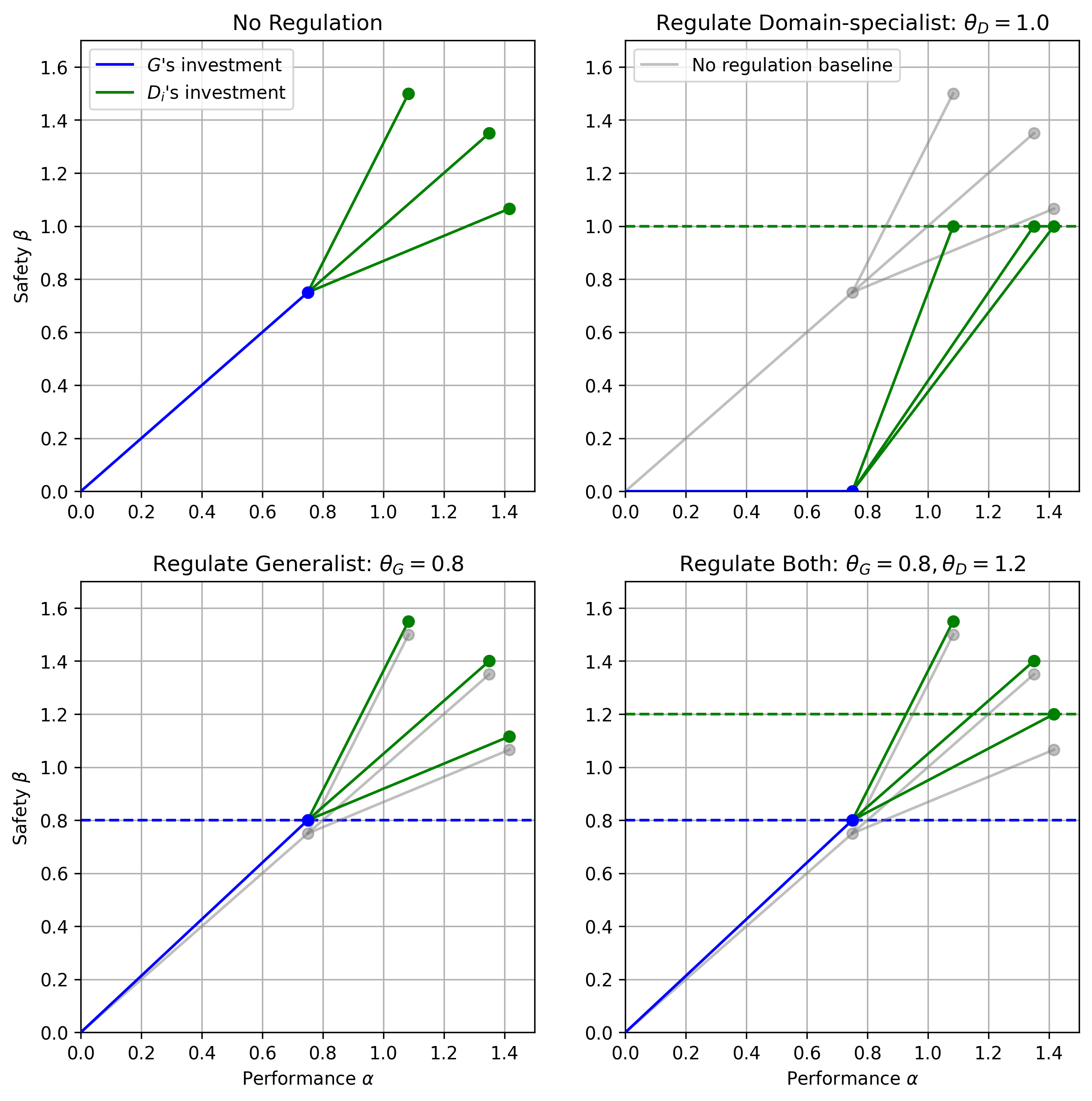

AI Safety Regulation

How should safety rules for AI be designed when we can’t easily observe the consequences in advance? We build a strategic model of an AI development chain in which a regulator sets minimum safety standards and penalties, and the firms developing the technology trade off safety against performance. Analyzing the model, we find that weak regulation focused mainly on downstream specialists can backfire and reduce safety, while stronger, well-placed regulation can act as a commitment device that improves both safety and performance, sometimes even benefiting all parties relative to no regulation.

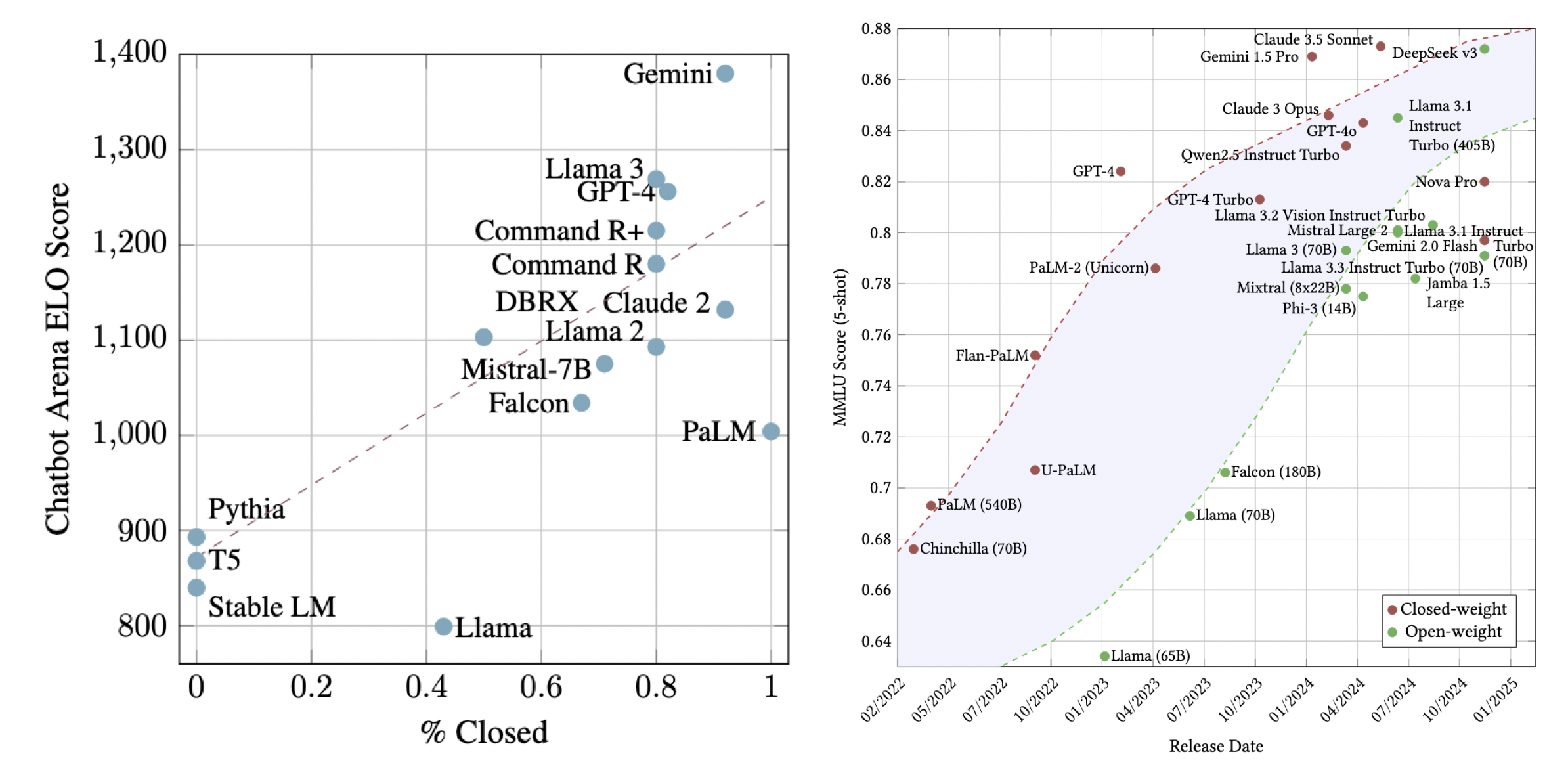

Modelling AI Openness

Policy regimes like the EU AI Act incentivize “open-source” AI models, but the operational definition of openness is still contested, and the incentives can be subtle. We model how a regulator’s openness standard shapes the behavior of a generalist who decides how to release a foundation model and a specialist who fine-tunes it for downstream use. The results characterize equilibria under different open-source thresholds and penalties, clarifying when each lever meaningfully changes release strategies. The broader goal is to offer a theoretical foundation for designing openness rules that align economic incentives with governance goals.

Published at NeurIPS

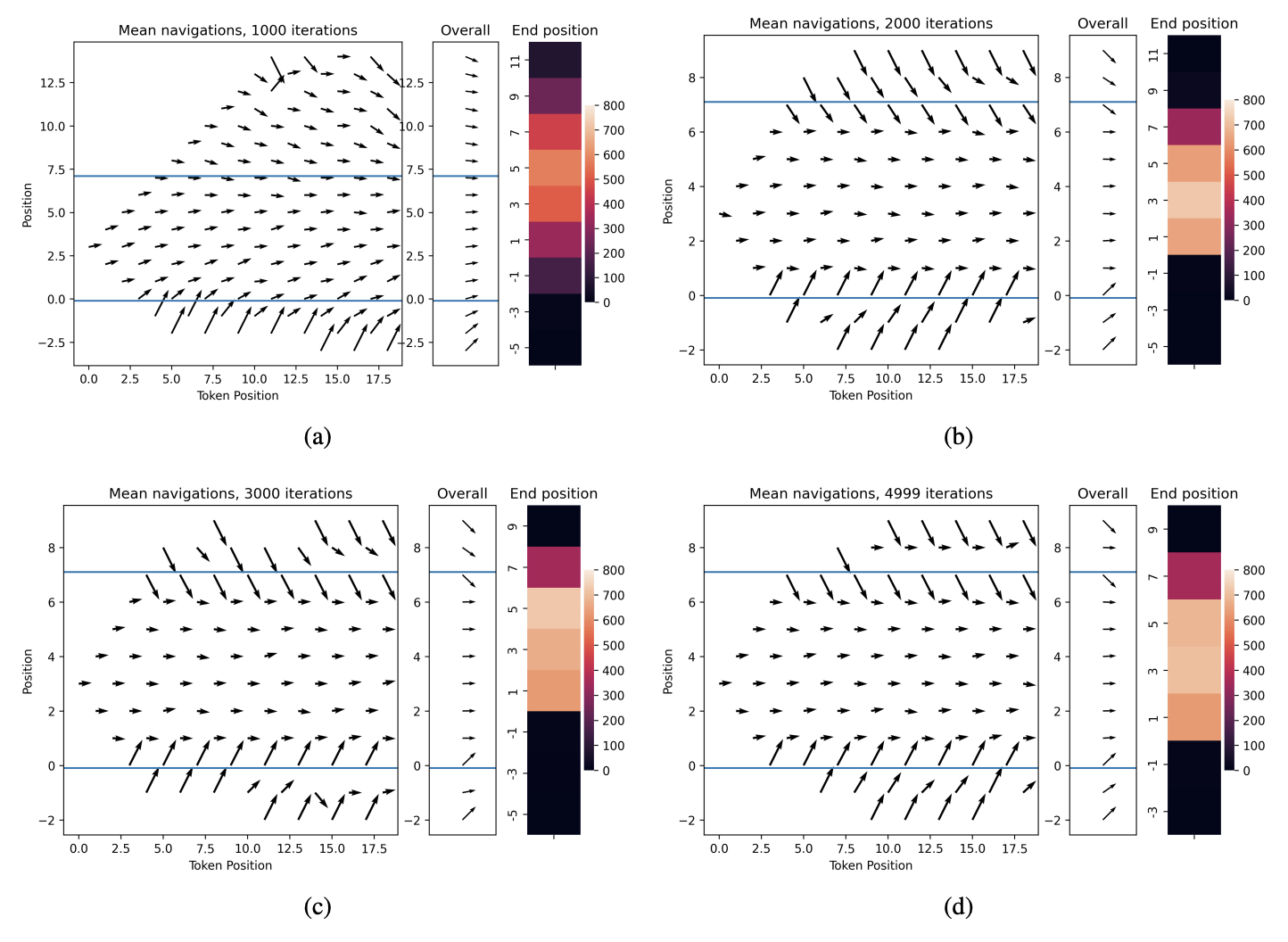

Mathematical Foundations for Responsible AI

Many responsible AI goals can be framed as rule-following: obeying grammatical constraints, honoring safety filters, or avoiding toxic outputs. We introduce a principled framework for evaluating rule compliance in language models for rules expressible as deterministic finite automata (DFAs). The core idea is an epsilon–delta notion of compliance: models should behave similarly on different prefixes that correspond to the same DFA state, yet meaningfully distinguish prefixes that correspond to different states. We operationalize this by defining “Myhill–Nerode Divergence,” and we validate the framework experimentally by training transformers on synthetic languages, opening the door to extensions beyond regular languages and to more reliable evaluation and control.
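To make the epsilon–delta idea concrete, here is a minimal Python sketch under loudly stated assumptions: the toy rule (strings over {a, b} with an even number of a's), the end-of-string symbol `$`, the use of total-variation distance, and the names `dfa_state`, `compliance_gaps`, and `ideal_model` are all hypothetical illustrations, not the Myhill–Nerode Divergence as defined in the paper. The sketch groups prefixes by the DFA state they reach, then reports the largest distance between next-symbol distributions within a state (which should be at most epsilon) and the smallest distance across states (which should be at least delta).

```python
from itertools import product

# Hypothetical toy rule: strings over {"a", "b"} with an even number of "a"s.
# DFA states: 0 = even count so far (accepting), 1 = odd count so far.
TRANSITIONS = {(0, "a"): 1, (0, "b"): 0, (1, "a"): 0, (1, "b"): 1}
START = 0


def dfa_state(prefix):
    """Run the DFA over a prefix and return the state it ends in."""
    state = START
    for symbol in prefix:
        state = TRANSITIONS[(state, symbol)]
    return state


def total_variation(p, q):
    """Total-variation distance between two next-symbol distributions (dicts)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def compliance_gaps(prefixes, next_symbol_probs):
    """Return (largest same-state distance, smallest cross-state distance).

    A model that respects the rule should keep the first value small (an
    epsilon) and the second value large (a delta).
    """
    same, cross = 0.0, float("inf")
    for x, y in product(prefixes, repeat=2):
        if x >= y:  # visit each unordered pair of distinct prefixes once
            continue
        d = total_variation(next_symbol_probs(x), next_symbol_probs(y))
        if dfa_state(x) == dfa_state(y):
            same = max(same, d)
        else:
            cross = min(cross, d)
    return same, cross


def ideal_model(prefix):
    """Hypothetical model that has learned the rule: it may emit the
    end-of-string symbol "$" only when the count of "a"s is even."""
    if dfa_state(prefix) == 0:
        return {"a": 1 / 3, "b": 1 / 3, "$": 1 / 3}
    return {"a": 0.5, "b": 0.5}


if __name__ == "__main__":
    prefixes = ["", "a", "b", "aa", "ab", "ba", "bb"]
    print(compliance_gaps(prefixes, ideal_model))
```

For this hypothetical model, the within-state gap is 0 and the cross-state gap is 1/3: the two states differ only in whether the sequence is allowed to stop, which is exactly the kind of distinction a Myhill–Nerode view of the language captures.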

Less Discriminatory Algorithms

Disparate impact doctrine gives the law a powerful handle on discriminatory algorithmic decisions, but turning its “less discriminatory alternative” concept into something operational raises hard questions. In this project, we formalize what it means to search for alternative models that reduce disparities while meeting the same business needs, and we surface fundamental limits of purely quantitative definitions when held-out data is unavailable. Our conclusion is constructive: workable standards must incorporate notions of “reasonableness,” and (despite real constraints) proactive search for candidate alternatives can be feasible in a formal sense, giving firms and plaintiffs a shared framework for identifying better models.

Fine-Tuning Games

There is palpable excitement about AI models trained to be, supposedly, “general purpose.” But before these technologies can reach the market, they must first be tweaked and fine-tuned for particular, domain-specific uses. In this project, we put forward a model of this adaptation process, highlighting the latent strategic dynamics that arise when one entity builds a general technology that other entities may tune and specialize. Our analysis suggests when a domain will or won’t adapt a general-purpose model: depending on the cost of investment, a domain might “abstain,” “contribute,” or “free-ride.”

Algorithmic Amplification

Published in a Knight First Amendment Institute Essay Series

A handful of technology companies increasingly control the audiences reached by posts and content on the internet. This reality may create grave problems and leave our society acutely vulnerable. [Image credit: Daniel Herzberg]

Optimization's Neglected Normative Commitments

Optimization is often offered as a decision aid for making high-stakes, real-world decisions. In this work, we dissect the normative assumptions and commitments that can be implicit in the use of optimization, and we point to a number of neglected problem cases and pitfalls that can and do arise.

Equity in City Services

Early work accepted to the 2022 ICML Workshop on Responsible Decision-Making in Dynamic Environments

Every day, cities need to allocate resources and respond to residents’ needs, and they aim to do so efficiently and equitably. Through a partnership with New York City’s Department of Parks and Recreation, we develop methods to test and evaluate whether the city is responding to requests effectively.

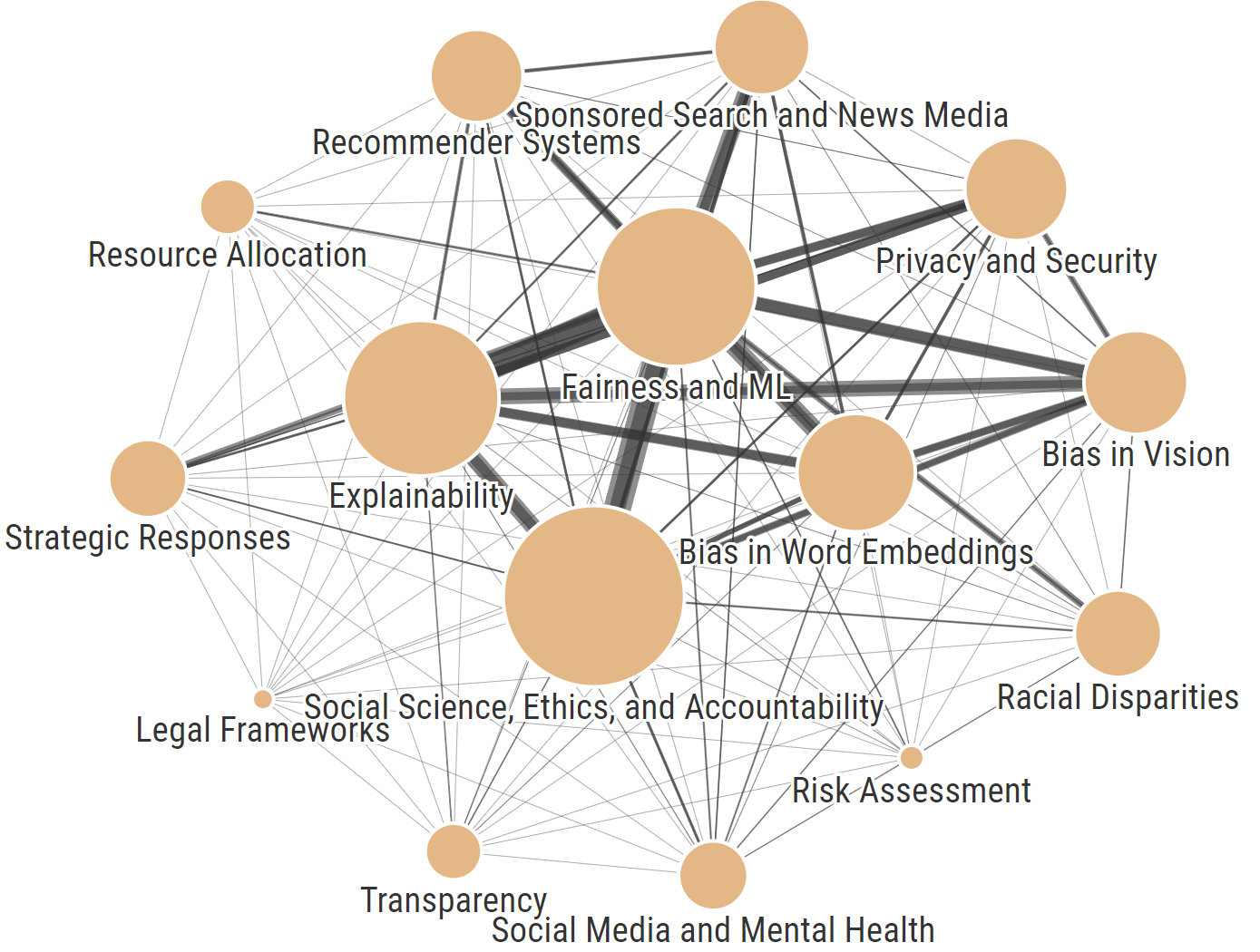

Four Years of FAccT

How can members of a new scholarly field critically analyze their own research directions, shortcomings, and future prospects? In this work, our team uses reflexive, participatory mixed methods to understand the burgeoning research area known as Fairness, Accountability, and Transparency for Socio-technical Systems.

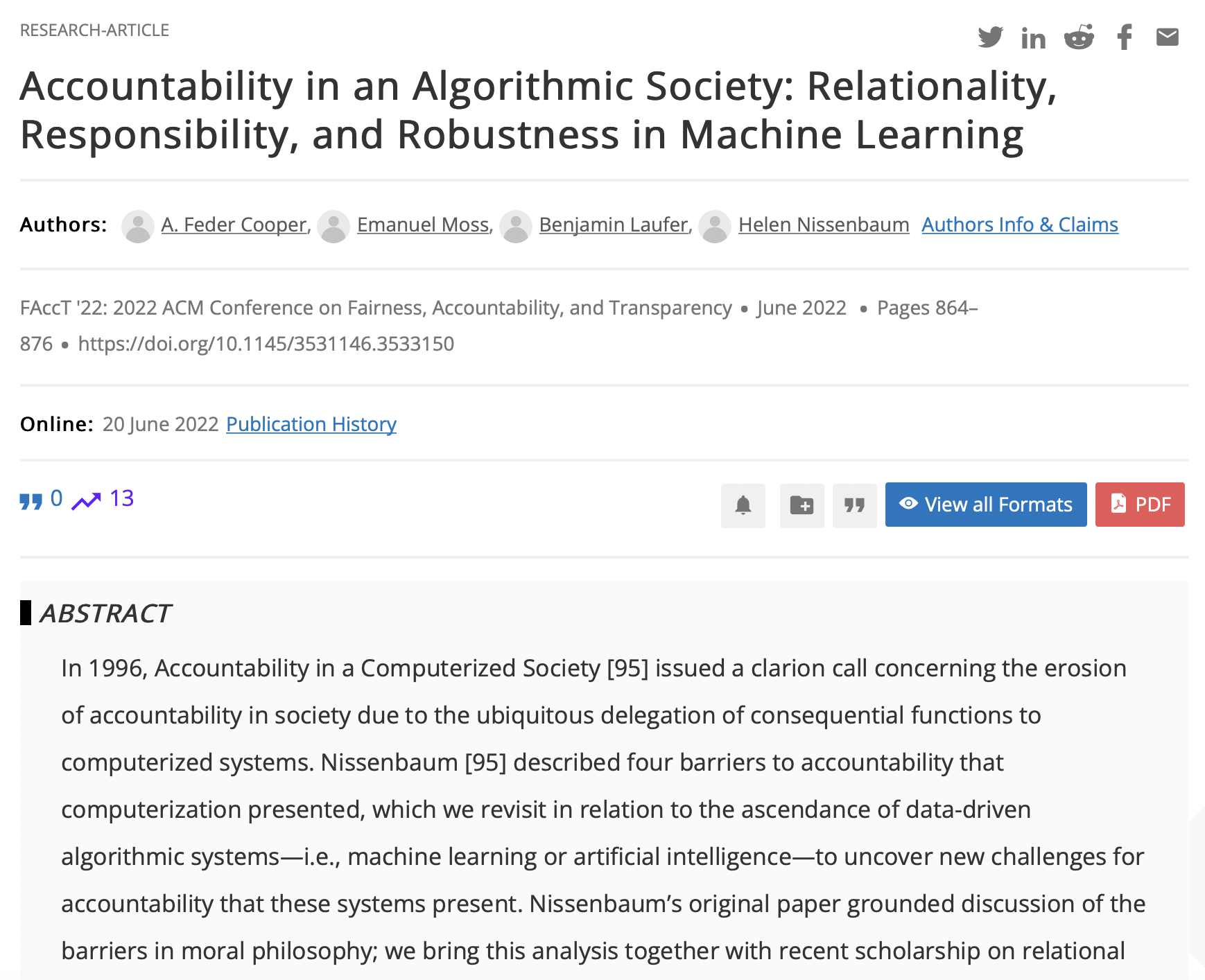

Accountability in an Algorithmic Society

Artificial intelligence and machine learning technologies make it difficult to establish accountability. In a new paper, we discuss why accountability is important, which barriers exist, and what we can do about it.

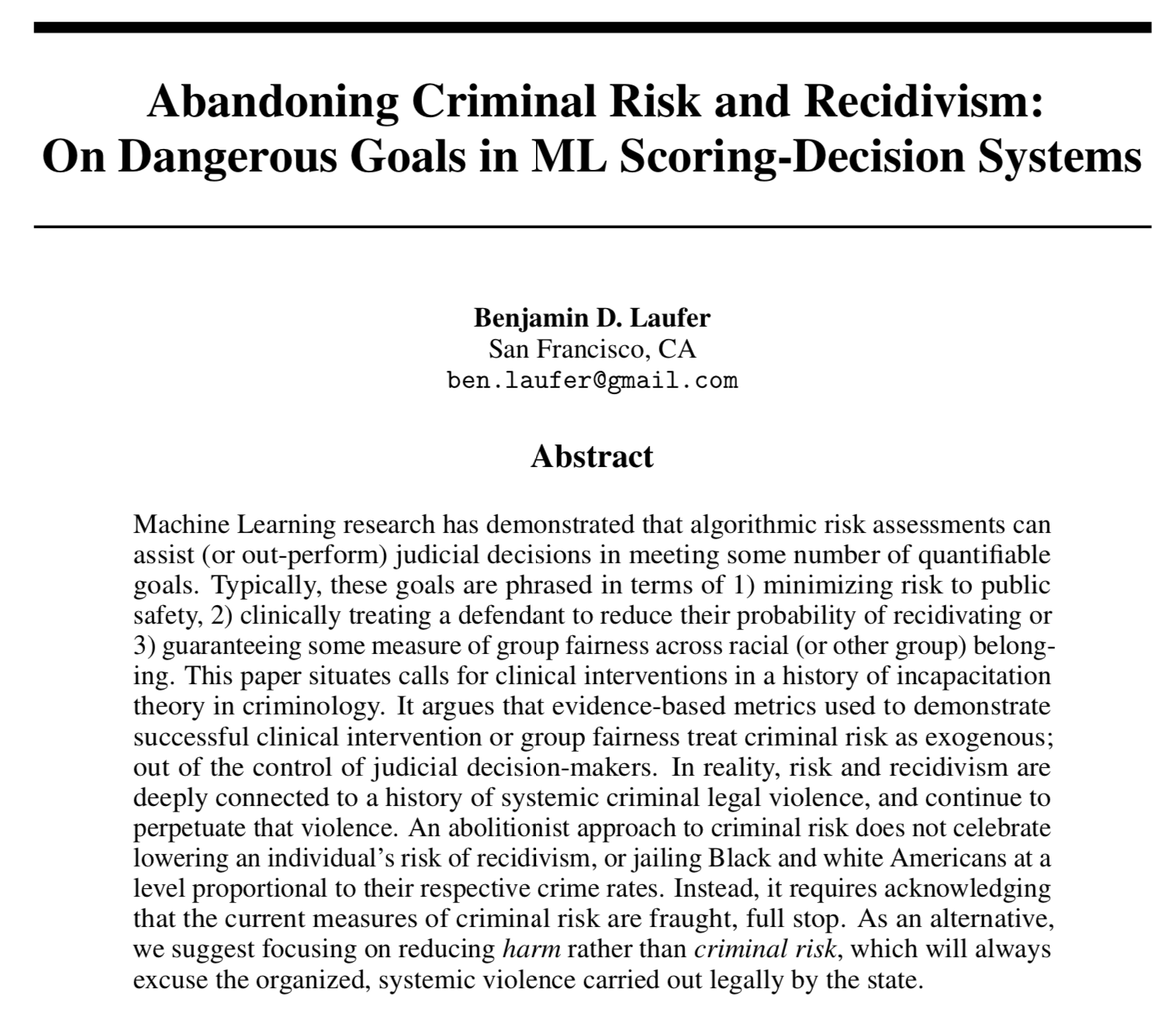

AI Governance Goals

Accepted to the NeurIPS Resistance AI Workshop

High-impact machine learning algorithms, including algorithmic risk assessments, are trained to predict or achieve some quantifiable metric. Examples include lowering criminal risk scores and recidivism rates. Are these the right goals?

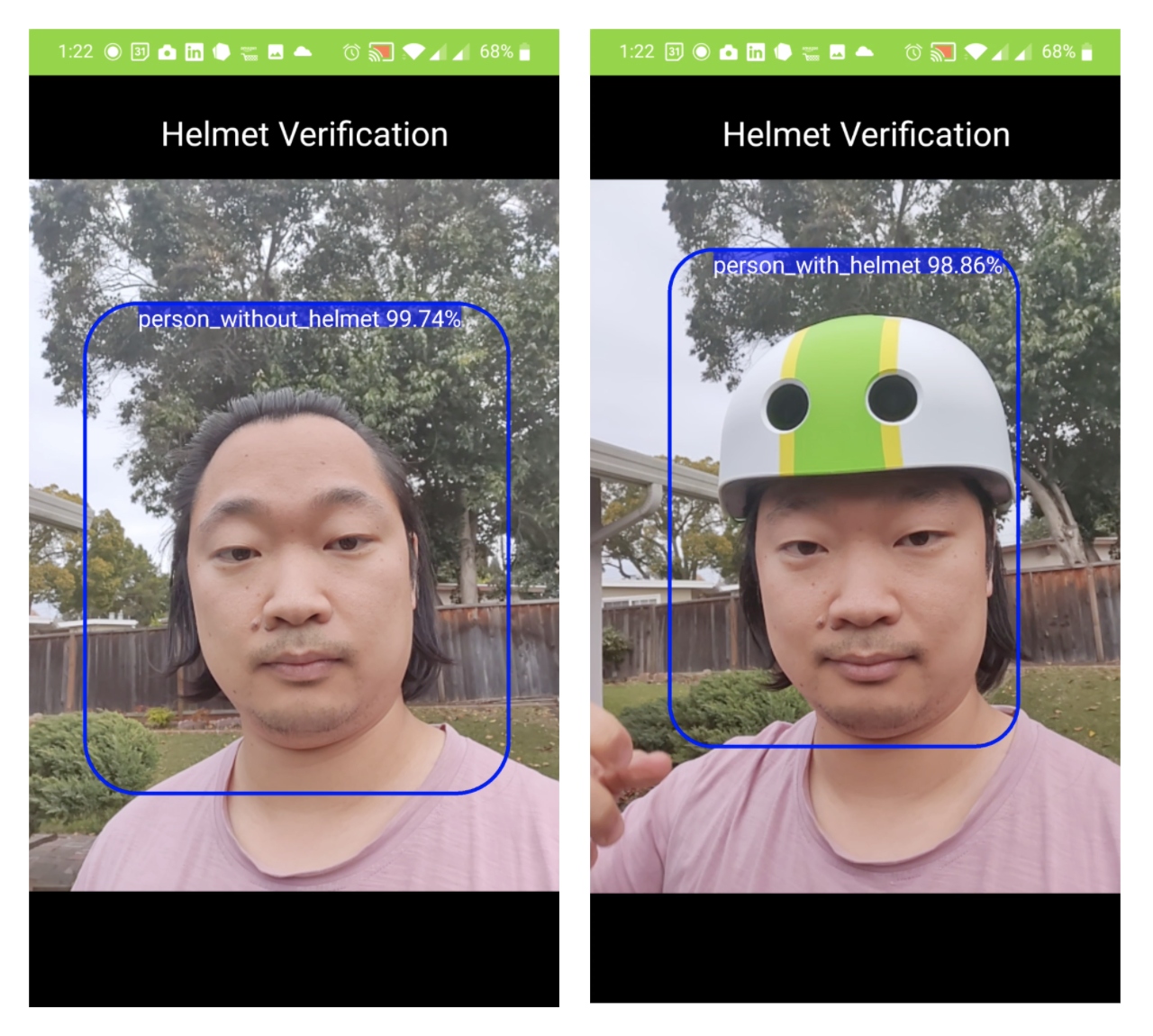

Computer Vision for Helmet Detection

The biggest challenge for bike, scooter, and moped sharing companies is rider safety in car-dominated cities. To encourage riders to wear helmets, we built a computer vision model that detects in real time whether a rider is wearing a helmet. We can use this feature to motivate helmet-wearing through discounts, nudges, or strict requirements (in certain markets). We scraped images from Google, built a convolutional neural network (CNN) using transfer learning with YOLOv4, and designed and implemented the UI for the Lime app. The model's classification accuracy on a test set was 97.55%.

1st place winner in Lime's company-wide Hackathon, December 2020.

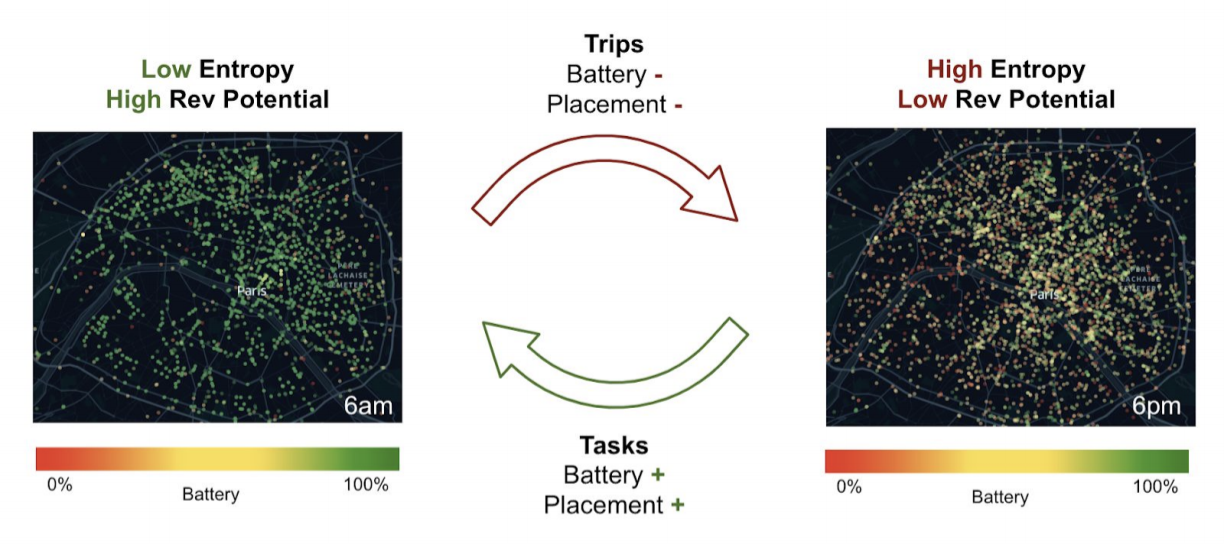

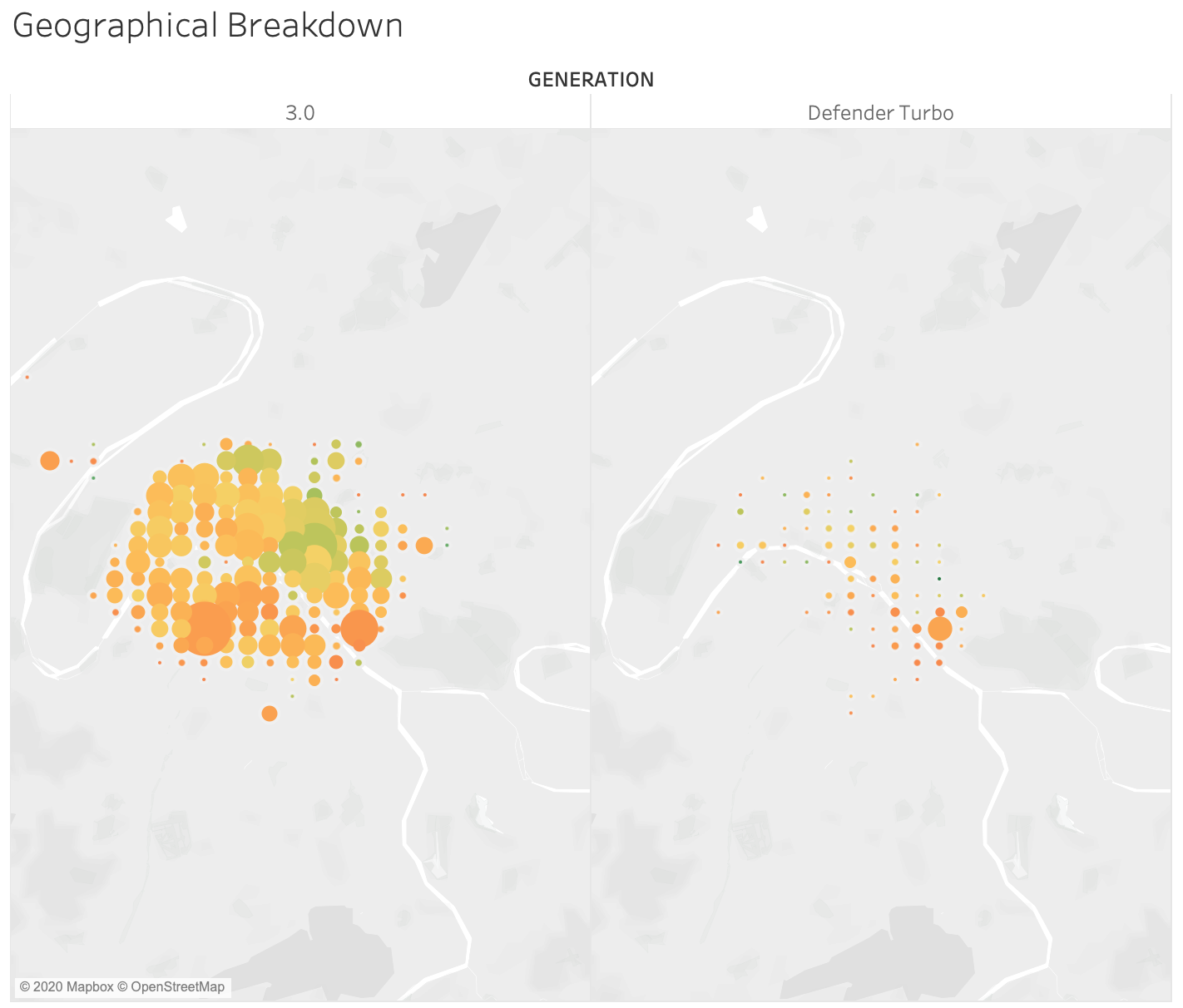

Bike and Scooter Fleet Optimization

Project advised by Dounan Tang @ Lime

Lightweight dockless vehicles are deployed at full battery in the highest-demand locations. Throughout the day, trips drain their batteries and carry them to locations with lower trip potential. When and where should Lime pick up vehicles to complete charging and rebalancing tasks?

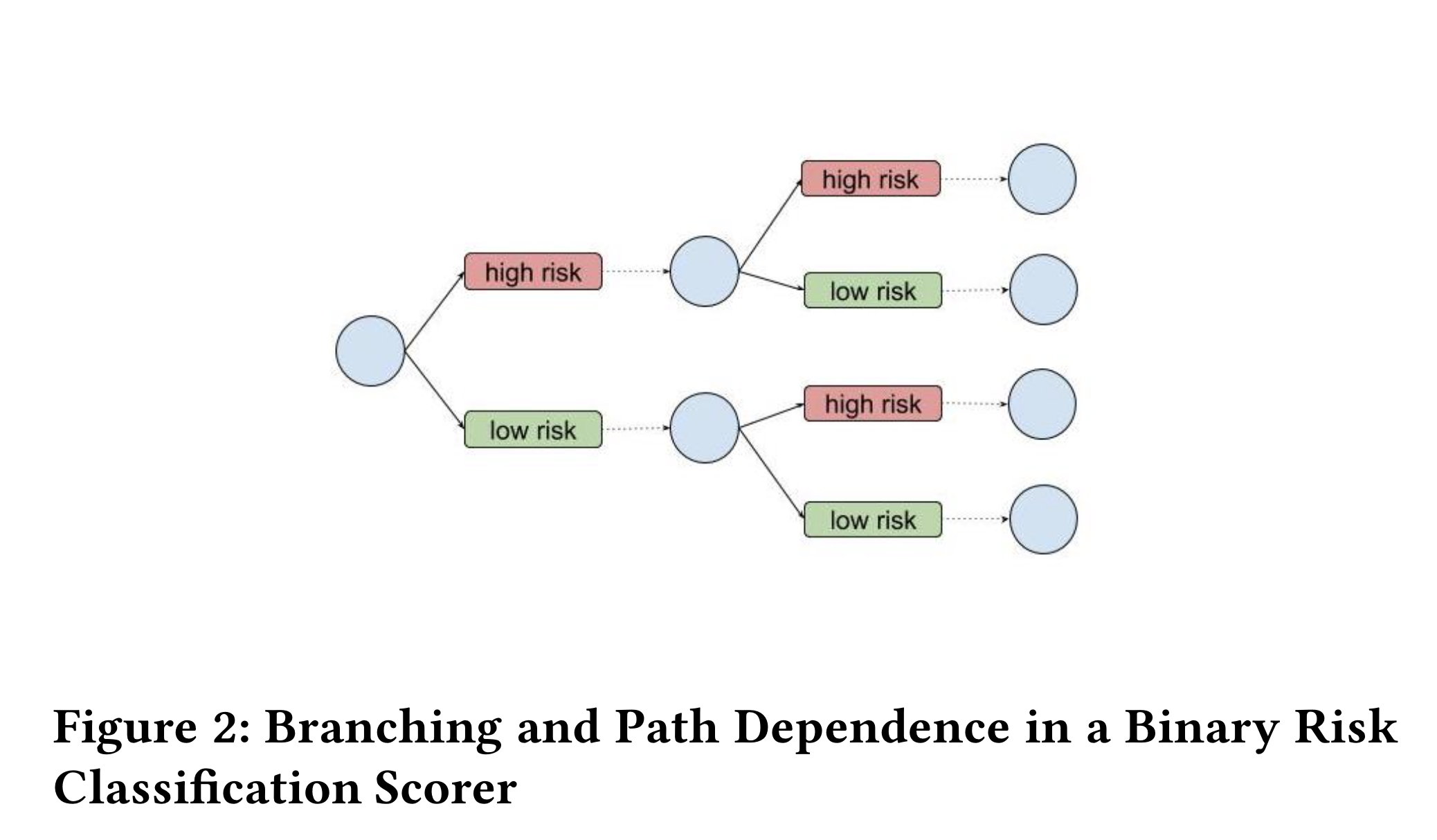

Feedback Effects in Repeated Criminal Decisions

Algorithmic risk assessment tools are widely used in the United States today to assist pre-trial and sentencing decisions. Current auditing methods are limited in scope, do not have standardized procedures, and (importantly) ignore feedback effects that arise from repeated decision-making. How do we create accountability?

Swappable Battery Technology for E-vehicle Sharing Systems

Ongoing work with Lei Xu and Jeh Lokhande @ Lime

Lime recently launched its first electric vehicles with swappable batteries, so that people do not have to bring vehicles to power sources to charge them. How much closer will this technology bring us to zero-carbon travel?

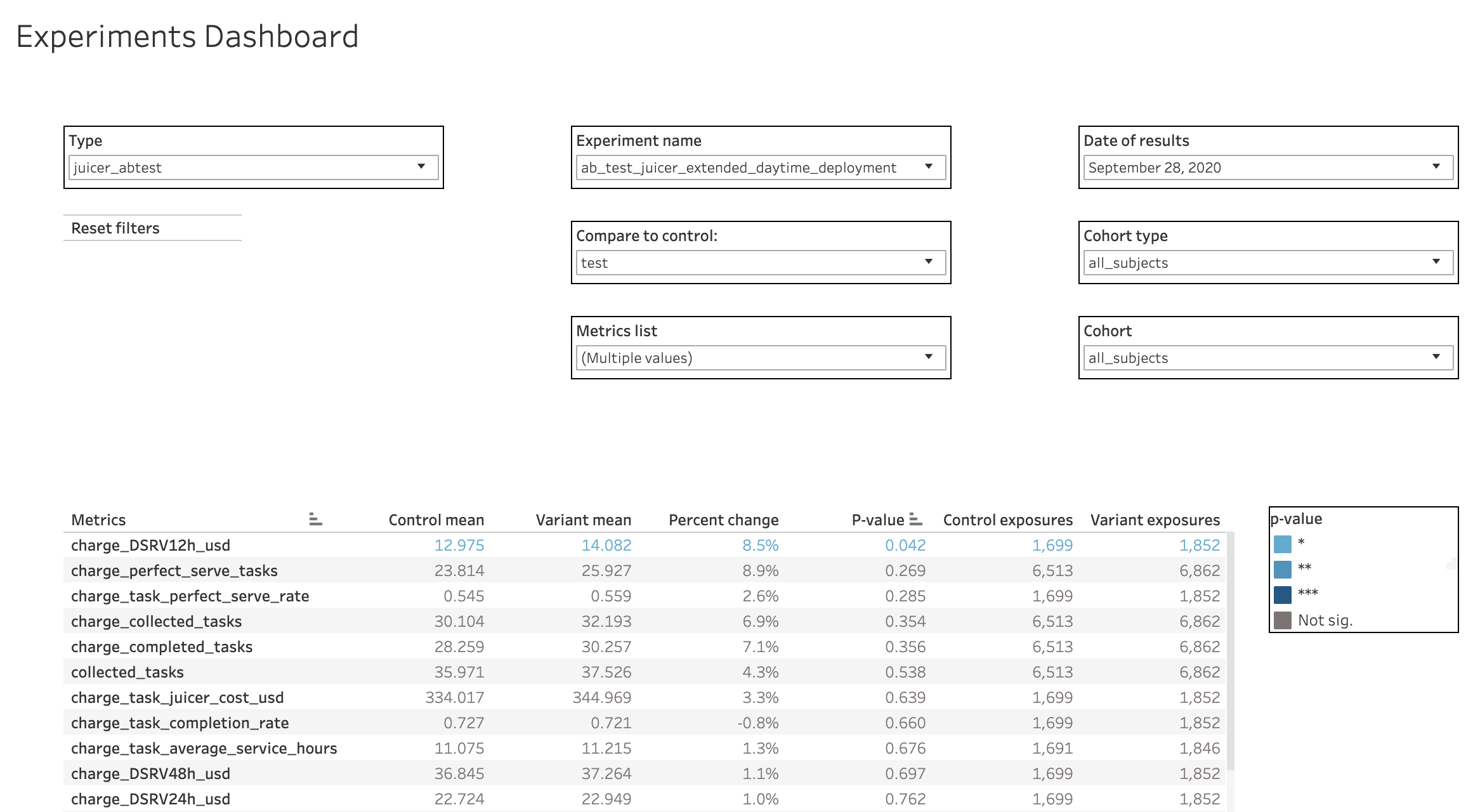

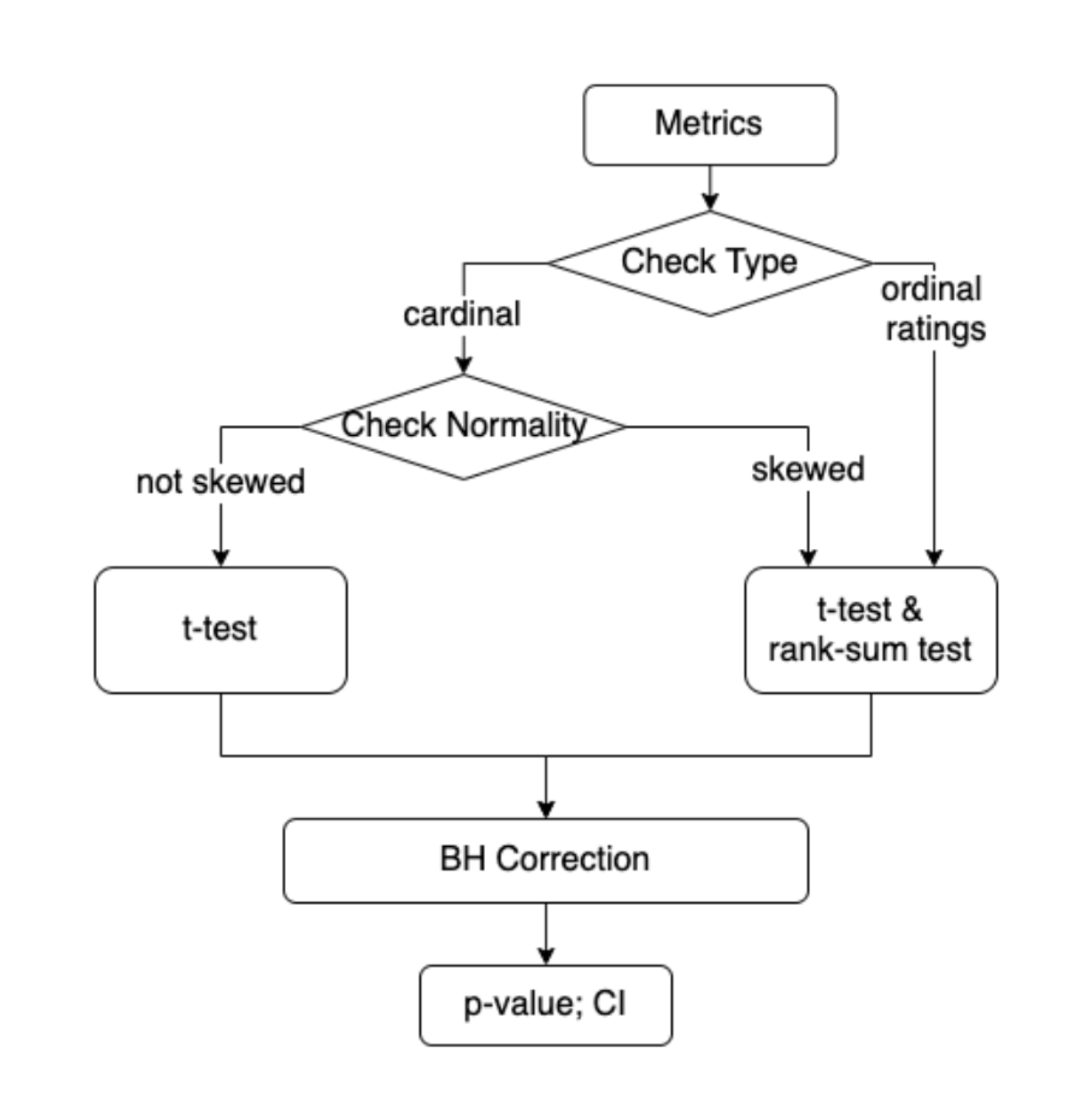

Automate Lime's A/B Tests

with Tristan Taru @ Lime

Supporting randomized A/B tests is fun, but the work gets repetitive. I created an automated tool that performs the relevant statistical hypothesis tests and reports treatment effects for a number of metrics. The tool supports randomized A/B tests at the vehicle, user, juicer, and switchback (region-day) levels. This was a "10x" project: it enabled far more experiment support and allowed local teams to launch their own experiments.
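As a rough illustration of the per-metric computation such a tool might run, here is a minimal sketch assuming independent randomized units and samples large enough for a normal approximation with a Welch-style standard error. The function names `welch_effect` and `report` are hypothetical, and this is not Lime's actual tool.

```python
import math
from statistics import NormalDist, fmean, variance


def welch_effect(control, treatment, alpha=0.05):
    """Estimate the treatment effect (difference in means) for one metric.

    Uses a Welch-style standard error with a normal approximation, which is
    reasonable only when both arms have many independent units.
    """
    effect = fmean(treatment) - fmean(control)
    se = math.sqrt(variance(treatment) / len(treatment)
                   + variance(control) / len(control))
    if se == 0:
        raise ValueError("zero variance in both arms; test is undefined")
    z = effect / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))       # two-sided test
    half_width = NormalDist().inv_cdf(1 - alpha / 2) * se
    return {"effect": effect, "p_value": p_value,
            "ci": (effect - half_width, effect + half_width)}


def report(metrics_by_arm, alpha=0.05):
    """Run the test for every metric.

    metrics_by_arm maps a metric name to (control_values, treatment_values),
    with one value per randomized unit (e.g. per user or per region-day).
    """
    return {name: welch_effect(c, t, alpha)
            for name, (c, t) in metrics_by_arm.items()}
```

For a switchback design, one would pass one aggregated value per region-day rather than per-trip values, since the region-day is the randomized unit; small or clustered samples would call for a different test.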

Lime's Experimentation Grand Strategy

with Kamya Jaggadish, Tristan Taru, Dounan Tang, Jeh Lokhande, and Siyi Luo @ Lime

To A/B or not to A/B? Lime supports hundreds of randomized A/B tests. It also supports causal impact studies and offline analyses when A/B tests are not appropriate. I helped create briefs on how to design experiments and how to report and interpret their results.

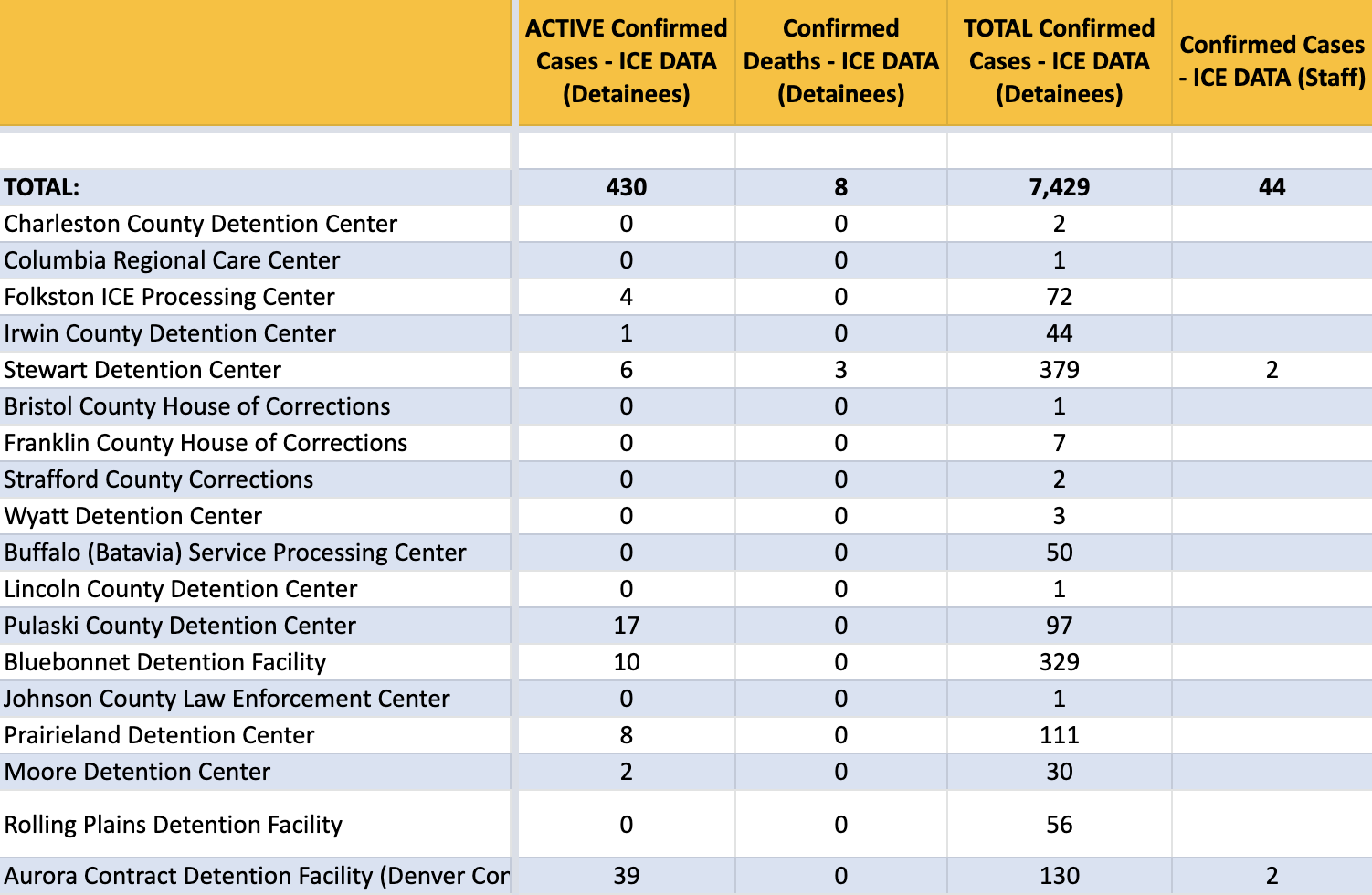

COVID Behind Bars Project

With Ishan Nagpal, Theresa Chang, and Sharon Dolovich @ UCLA COVID Behind Bars Data Project

People held in immigration detention facilities are becoming infected with COVID-19. ICE reports case and death counts, but it has also transferred or released people with the infection, who may then be missing from those counts. With UCLA's COVID Behind Bars Research Group, I worked to combine scraped ICE counts with transfer and release information and to systematically report these numbers for open-source research.

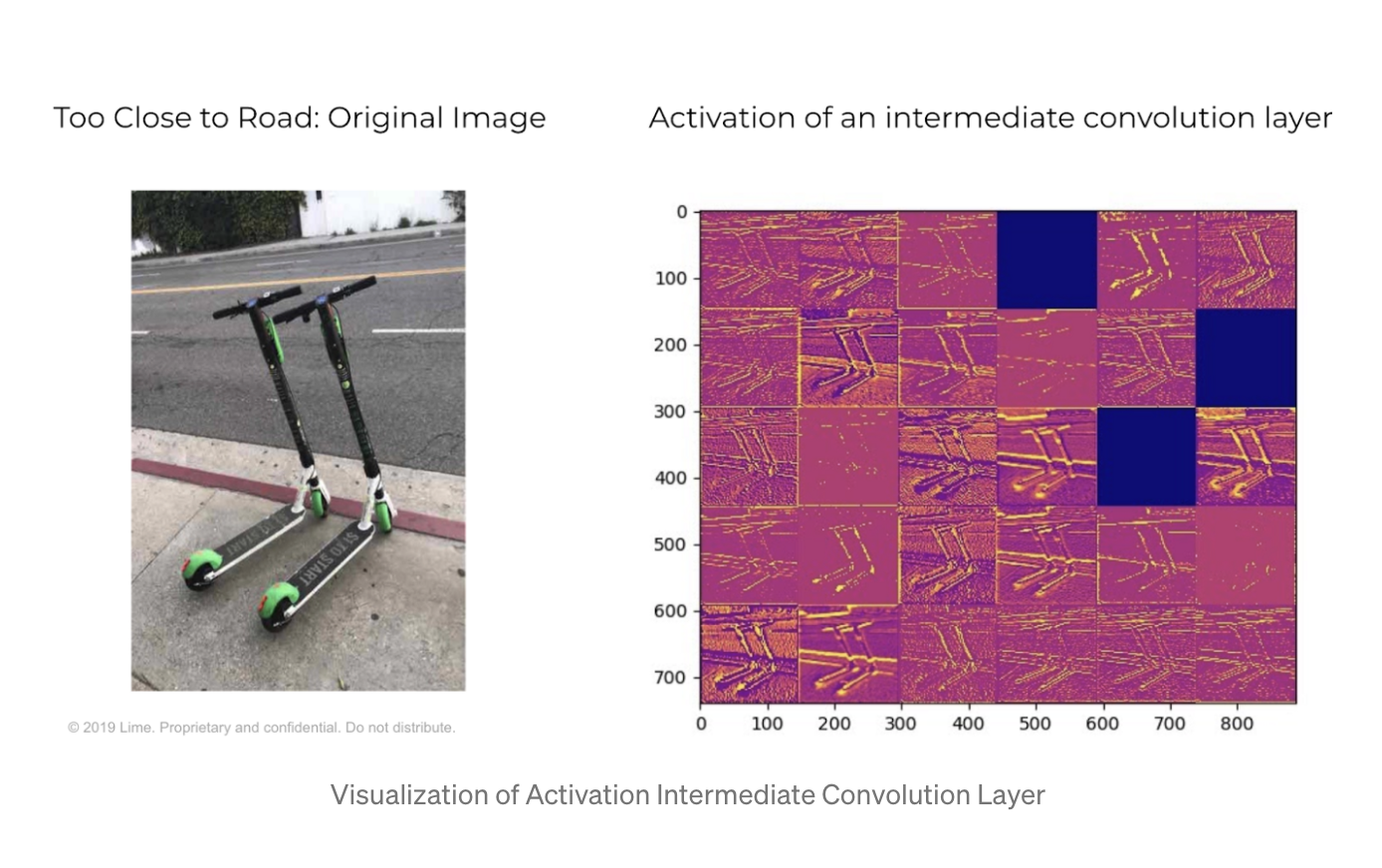

Computer Vision for Parking Compliance

With Andrew Xia and Yi Su @ Lime

When riders finish a ride or employees deploy a fresh scooter, they take a photo of the parked vehicle. Cities require proper parking so that scooters do not get in pedestrians' way. Using our large photo dataset, we trained and deployed a machine learning model (CNN) to identify improper parking.

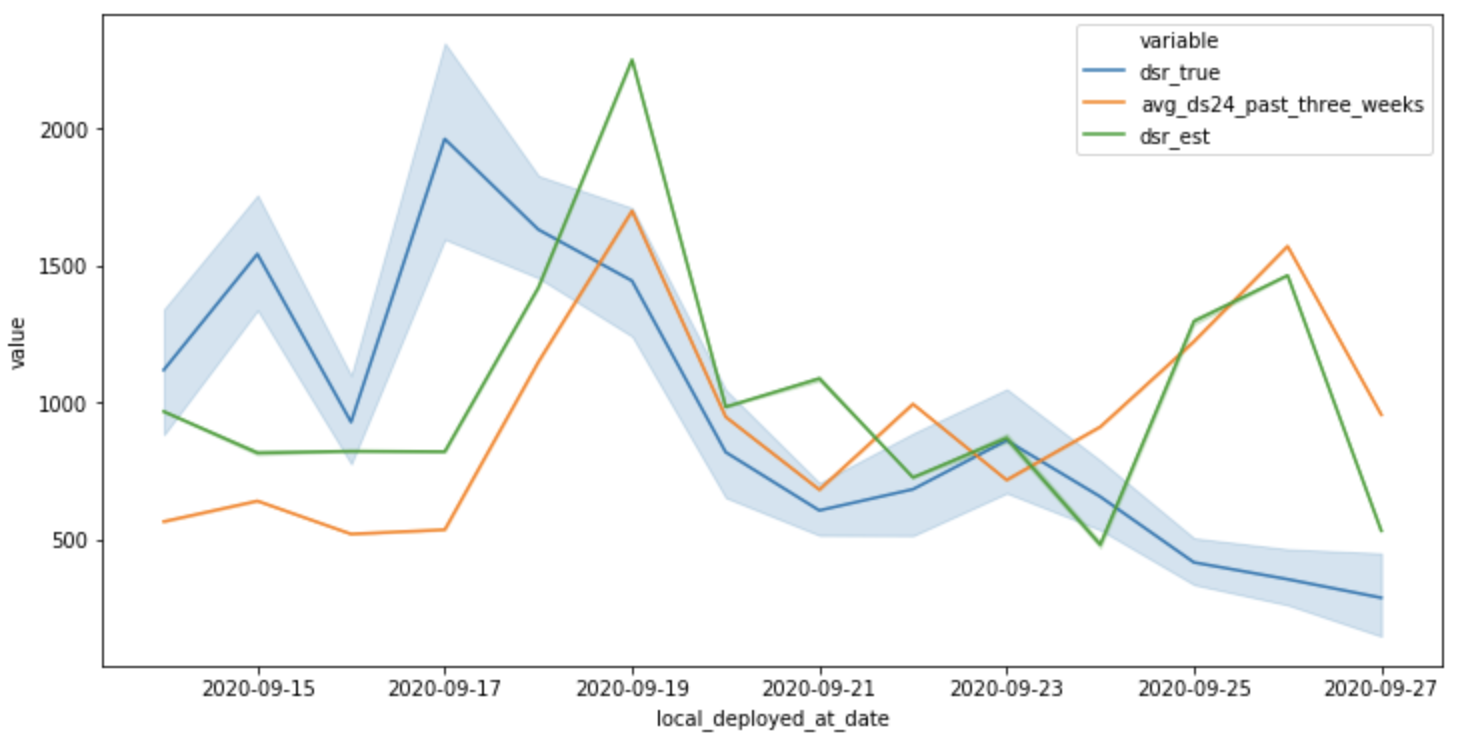

Bike and Scooter Demand Forecasting

Project advised by Dounan Tang @ Lime

Most models at Lime use region-wide, 3-week historical averages to estimate a vehicle's revenue for the next day. We can reduce error by training an ML model with a few additional pieces of information (weather, vehicle model, seasonal trend, location, and auto-regressive terms).

When Twitter Trends Trend: Self-Referential Topics and Internet Virality

Personal project

Amid widespread BLM protests in 2020, many took to social media for "Blackout Tuesday," a day on which they posted only a black square. The event spurred significant debate, not about any underlying political issue but about the squares themselves. A defining feature of the Internet is its self-referentiality. When are Twitter trends about events, and when do they become about their own trending status? I am getting familiar with the Twitter API using this topic as my guide.

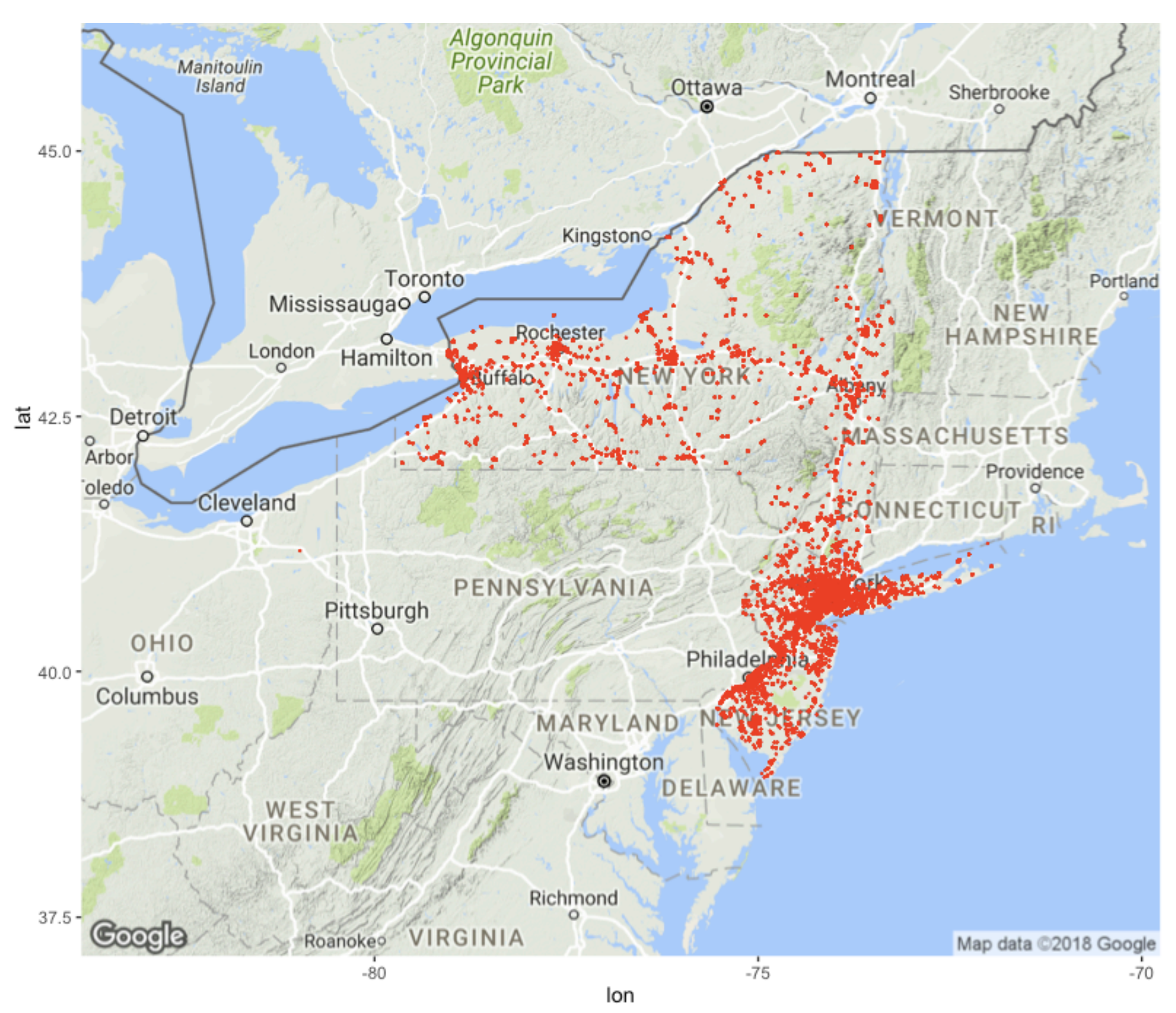

Compounding Injustice: History & Prediction in Carceral Decision Making

Senior thesis advised by Miklos Racz and Eduardo Morales @ Princeton

For my senior thesis at Princeton, I wrote on pre-trial and sentencing decisions in the U.S. criminal legal system. I used a combination of an original dataset (court dockets scraped from the Philadelphia court system), modelling techniques, and critical analysis to explore compounding feedback effects in the criminal decision process. The work won three graduation honors:

(1) Best undergraduate thesis in Urban Studies [announcement1, announcement2]

(2) Kenneth H. Condit Graduation Prize for Excellence in Scholarship and Community Impact [announcement]

(3) Departmental Distinction

Ruminations on Media & Sensation

Project stemming from coursework, advised by Thomas Y. Levin @ Princeton

Gelato shaped like a flower. Slime videos. ASMR. How is the Internet changing the way we sense?

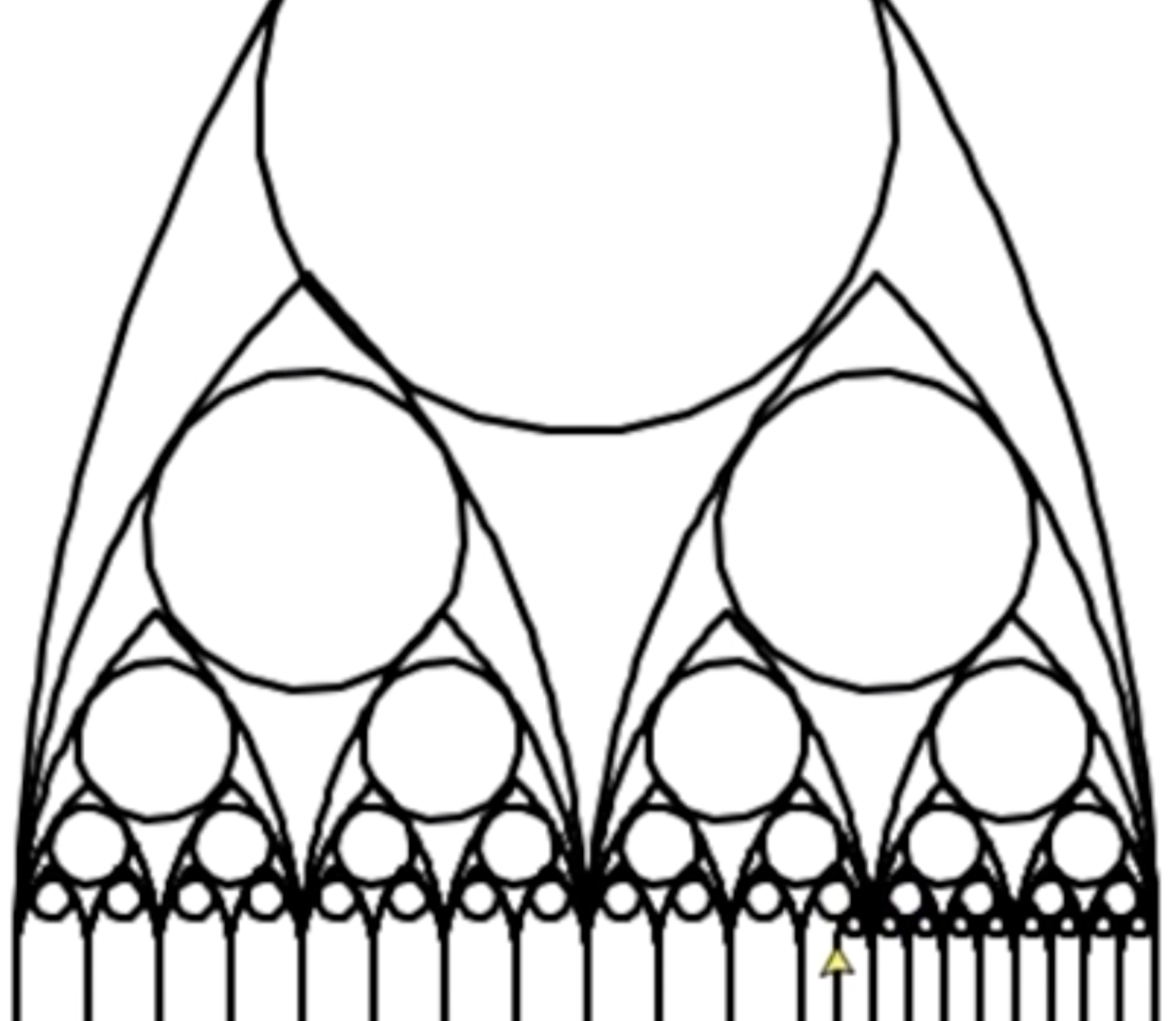

Recursion and Gothic Architecture

Personal Project @ Princeton

Much of Gothic architecture was built before computers existed, yet Gothic structures exhibit self-similarity across scales. Mathematicians and computer scientists describe this as "fractal" or as following "recursive logic." Using just a few lines of code, I design fairly ornate elements of a cathedral.
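A hypothetical sketch of this kind of recursion (not the original code): a window is split into two narrower windows with a round opening (oculus) above them, and the same rule is applied inside each half, much as Gothic tracery nests lancets within larger arches. The `Shape` type, the `tracery` function, and all proportions below are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Shape:
    kind: str    # "lancet" (narrow pointed window) or "oculus" (round opening)
    x: float     # left edge for a lancet, centre x for an oculus
    y: float     # bottom edge for a lancet, centre y for an oculus
    size: float  # width for a lancet, radius for an oculus


def tracery(x, y, width, height, depth):
    """Recursively fill a window with nested lancets and oculi."""
    if depth == 0:
        return [Shape("lancet", x, y, width)]
    gap = 0.1 * width            # mullion between the two halves (arbitrary ratio)
    half = (width - gap) / 2     # width of each sub-window
    lower = 0.7 * height         # sub-windows take the lower 70% of the height
    radius = 0.8 * min(width, height - lower) / 2
    # Centre the oculus in the remaining top 30% of the window.
    shapes = [Shape("oculus", x + width / 2, y + lower + (height - lower) / 2, radius)]
    shapes += tracery(x, y, half, lower, depth - 1)
    shapes += tracery(x + half + gap, y, half, lower, depth - 1)
    return shapes


if __name__ == "__main__":
    for shape in tracery(0.0, 0.0, 4.0, 10.0, 2):
        print(shape)
```

Each level of recursion doubles the number of lancets, so depth 3 yields 8 lancets and 7 oculi; a drawing library could then render these shapes.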

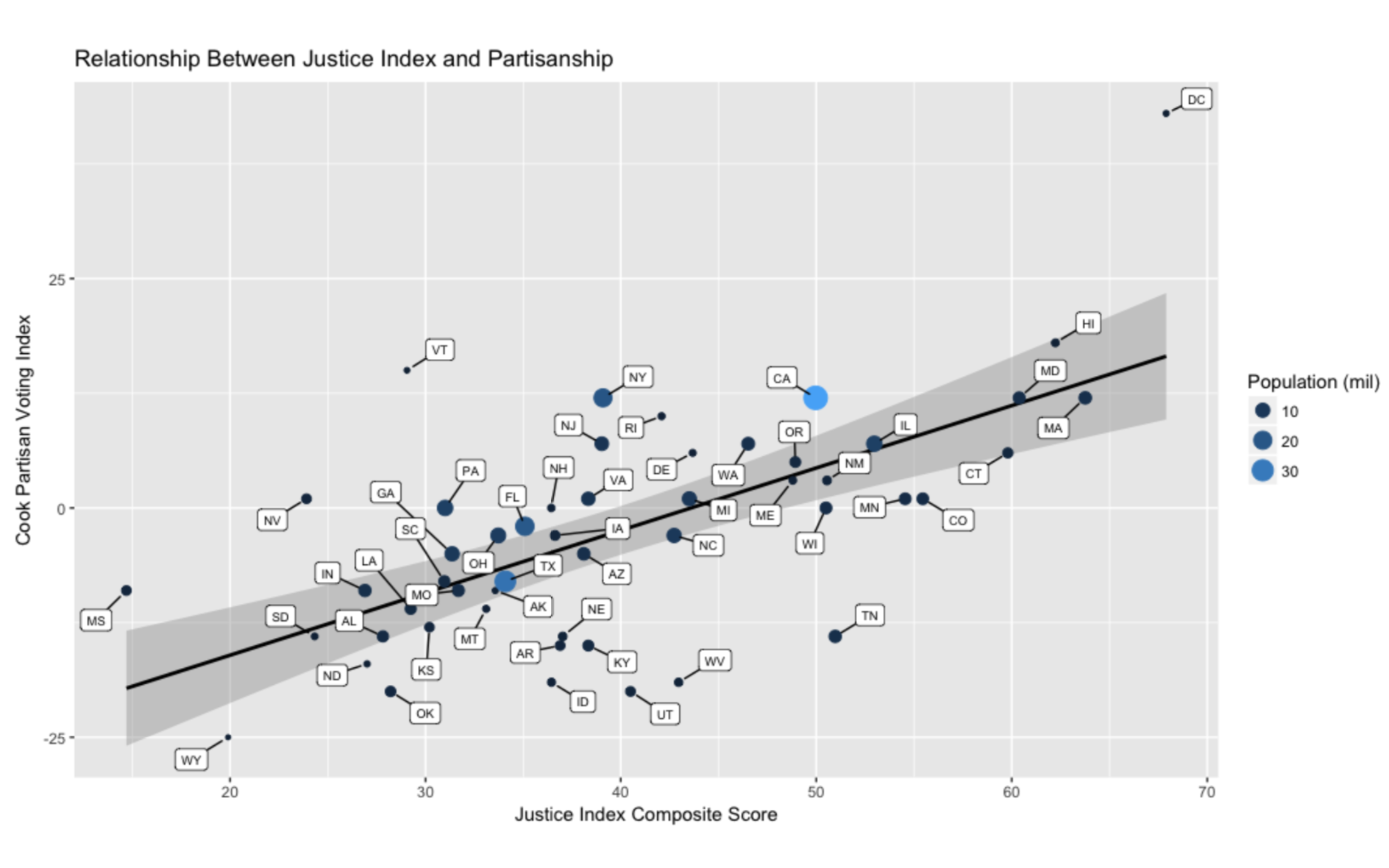

Justice Index, A2J and Partisan State Leanings

The National Center for Access to Justice (NCAJ) created the Justice Index, a quantitative metric for tracking state civil legal initiatives and holding governments accountable, à la Eviction Lab. Which states perform better than others, and why? How do these metrics correlate with partisan state leanings, income levels, and other census information?

Optimizing EPA Clean Air Act Enforcement

Research advised by Elahesadat Naghib and Robert Vanderbei @ Princeton and Daniel Teitelbaum @ EPA

Inspectors visit facilities to check Clean Air Act (CAA) compliance either periodically or at random. Using public air quality data and historical performance, can an algorithm do better?

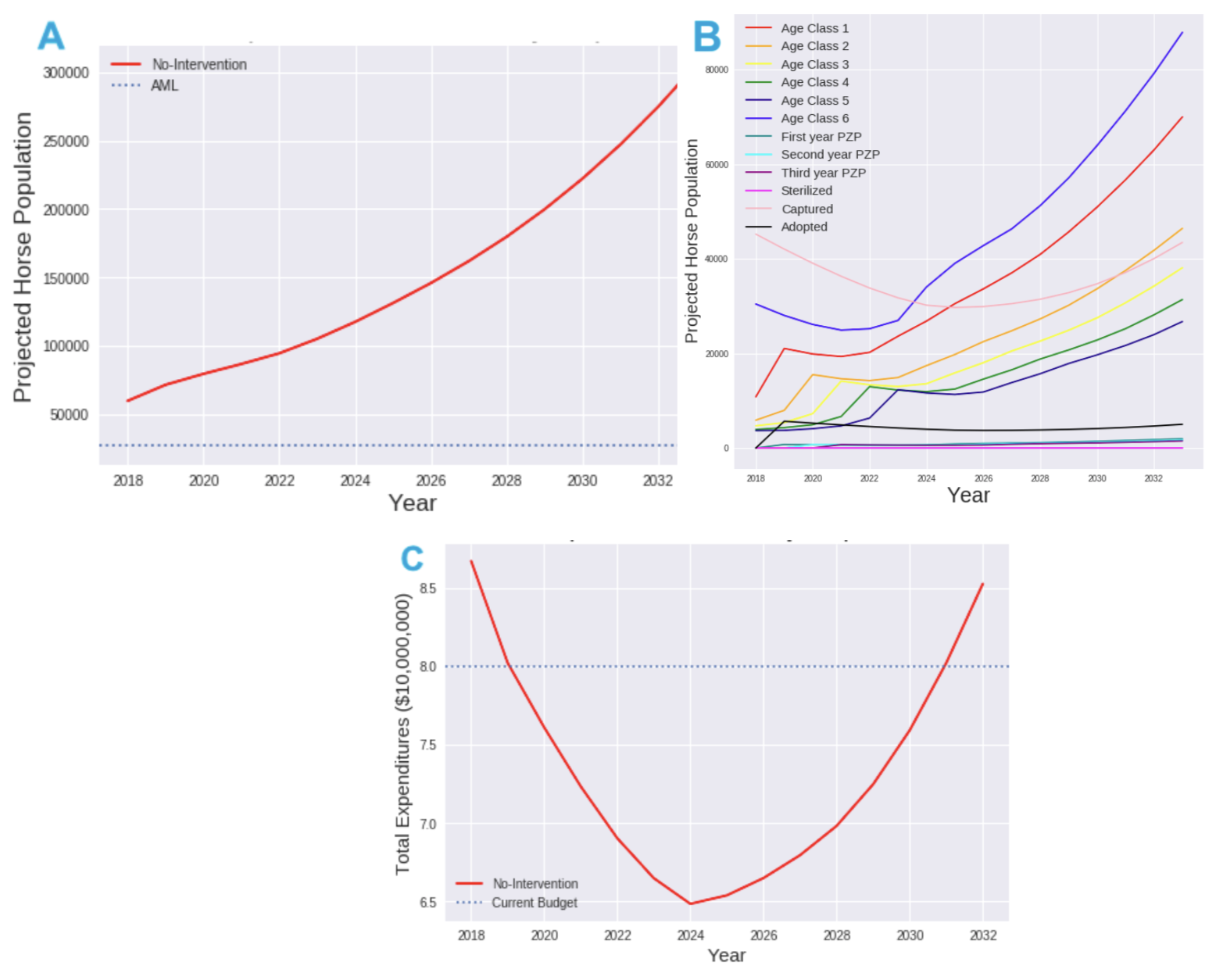

Controlling Horse Populations in the Western United States

Research project stemming from coursework, advised by Simon Levin @ Princeton

Feral horse populations are exploding, and the Bureau of Land Management refrains from culling because it is unpopular. Instead, it gathers large numbers of horses and keeps them in barns. From a population dynamics perspective, we find that the current strategy is inadequate, and we simulate the impacts of a number of proposed alternatives.

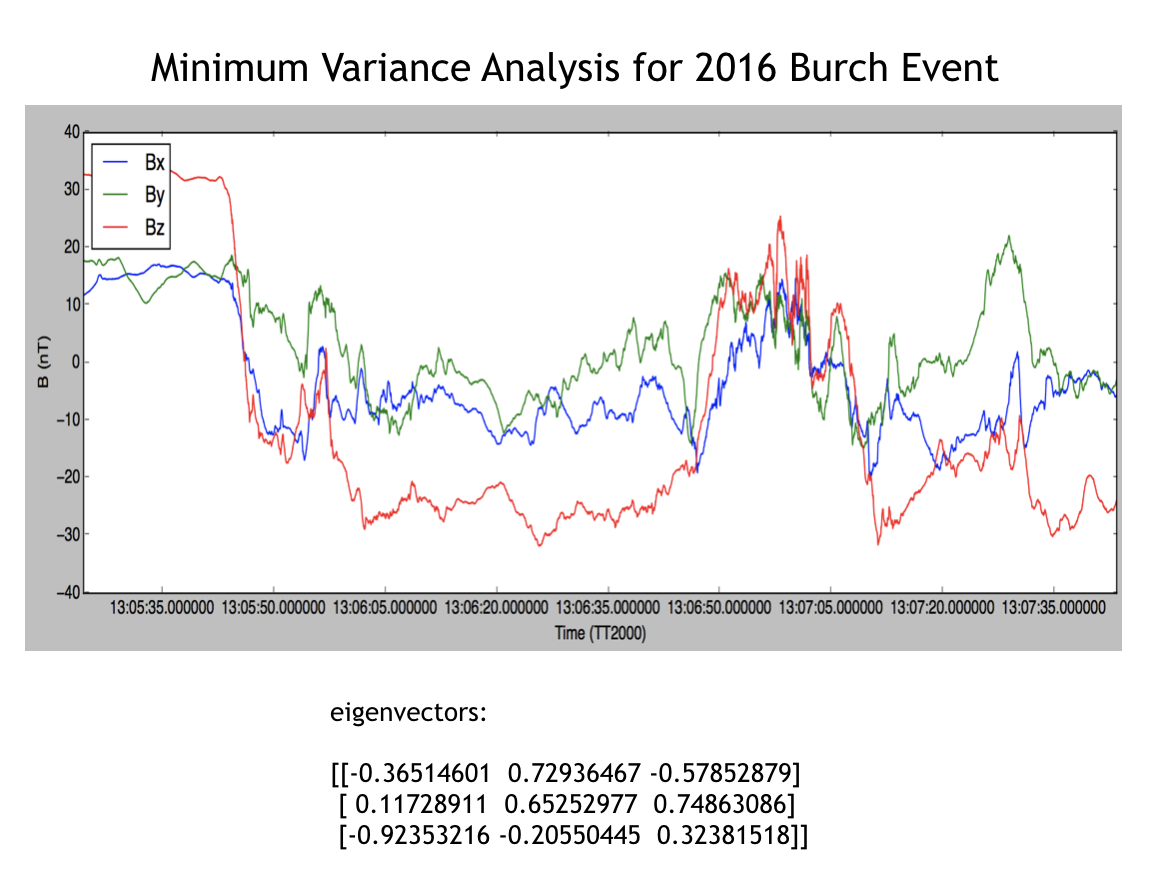

Statistical Methods for Detecting Magnetic Reconnection Events using NASA Spacecraft Data

Research conducted with Amanda Brown. Advised by Masaaki Yamada @ PPPL, 2016-2017

During an 8-month research assistantship at the Princeton Plasma Physics Laboratory under Dr. Masaaki Yamada, I implemented a minimum variance analysis (MVA) technique to detect magnetic reconnection events in space. This work calibrated magnetic field recordings from the four spacecraft of NASA's Magnetospheric Multiscale (MMS) mission. From the data, I identified ~30 likely reconnection events, which the lab analyzed further. Understanding reconnection is a key part of developing nuclear fusion energy here on Earth.
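Minimum variance analysis is a standard technique: build the 3×3 variance matrix of the magnetic field samples and take its eigenvectors, so that the direction of minimum variance estimates the normal to the current sheet. A minimal NumPy sketch follows; the function name and the synthetic field are illustrative assumptions, not the lab's pipeline.

```python
import numpy as np


def minimum_variance_analysis(b):
    """Minimum variance analysis of an (N, 3) array of magnetic field samples.

    Returns (eigenvalues, eigenvectors) of the magnetic variance matrix
    M_ij = <B_i B_j> - <B_i><B_j>, with eigenvalues in ascending order and
    eigenvectors as columns. Column 0 (minimum variance) estimates the
    current-sheet normal; column 2 is the maximum-variance direction.
    """
    b = np.asarray(b, dtype=float)
    m = np.cov(b, rowvar=False, bias=True)   # bias=True divides by N, matching M_ij
    eigvals, eigvecs = np.linalg.eigh(m)     # eigh returns eigenvalues in ascending order
    return eigvals, eigvecs


if __name__ == "__main__":
    # Synthetic current sheet (illustrative only): B_x reverses sign across the
    # sheet, B_y is a small bump, and B_z is weak noise, so the normal is ~z.
    t = np.linspace(-3, 3, 201)
    rng = np.random.default_rng(0)
    field = np.column_stack([np.tanh(t),
                             0.5 / np.cosh(t) ** 2,
                             0.01 * rng.standard_normal(t.size)])
    vals, vecs = minimum_variance_analysis(field)
    print("eigenvalues:", vals)
    print("estimated normal:", vecs[:, 0])
```

In practice, the normal is treated as well determined only when the intermediate eigenvalue is much larger than the minimum one; the exact threshold is a judgment call.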

Writing

"Statistical Guarantees in the Search for Less Discriminatory Algorithms"

Accepted at CS & Law 2026, under review at Management Science, 2025.

"Anatomy of a Machine Learning Ecosystem: 2 Million Models on Hugging Face"

Revision at Proceedings of the National Academy of Sciences (PNAS), 2025.

"Measuring Rule-Following in Language Models"

ICML 2025 Workshop on Assessing World Models, 2025.

"On the Economic Impacts of AI Openness Regulation: A Game-theoretic model of Performance and Openness Implications"

Advances in Neural Information Processing Systems (NeurIPS), 2025.

"The Backfiring Effect of Weak AI Safety Regulation"

Revision at Proceedings of the National Academy of Sciences (PNAS), previously appeared at the ACM Conference on Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO) (non-archival), 2025.

"What Constitutes a Less Discriminatory Algorithm?"

ACM CSLaw '25 [presentation], earlier versions appeared at NeurIPS RegML and NeurIPS AFME workshops [oral spotlight], 2024.

"Fine-Tuning Games: Bargaining and Adaptation for General-Purpose Models"

The Web Conference 2024 (WWW) [oral spotlight], 2024.

"Algorithmic Displacement of Social Trust"

Knight Institute, Symposium and Essay Series on Algorithmic Amplification and Society, 2023.

"Strategic Evaluation: Subjects, Evaluators, and Society"

ACM Conference on Equity and Access in Algorithms, Mechanisms, and Optimization (EAAMO), 2023.

"Optimization's Neglected Normative Commitments"

ACM Conference on Fairness, Accountability, and Transparency (FAccT), 2023.

"Are computational interventions to advance fair lending robust to different modeling choices about the nature of lending?"

NeurIPS AFT workshop, 2023.

"End-to-end Auditing for Decision Pipelines"

ICML Workshop on Responsible Decision Making in Dynamic Environments (RDMDE), 2022.

"Collective Obfuscation and Crowdsourcing"

KDD MIS2-TrueFact and ICML DisCoML workshops, 2022.

"Four Years of FAccT: A Reflexive, Mixed-Methods Analysis of Research Contributions, Shortcomings, and Future Prospects"

ACM Conference on Fairness, Accountability, and Transparency (FAccT), 2022.

"Accountability in an Algorithmic Society"

ACM Conference on Fairness, Accountability, and Transparency (FAccT), 2022.

"Abandoning Criminal Risk and Recidivism: On Dangerous Goals in ML Scoring-Decision Systems"

"Compounding Injustice: History and Prediction in Carceral Decision-Making"

Undergraduate Thesis, Princeton, April 2019.

"Data Analytics and Machine Learning for Environmental Protection: Targeting Air Inspections"

Junior Research Paper, Princeton ORFE, January 2018.